Reporting guidelines

This page evaluates the extent to which the journal article meets the criteria from two discrete-event simulation study reporting guidelines:

- Monks et al. (2019) - STRESS-DES: Strengthening The Reporting of Empirical Simulation Studies (Discrete-Event Simulation) (Version 1.0).

- Zhang, Lhachimi, and Rogowski (2020) - The generic reporting checklist for healthcare-related discrete event simulation studies derived from the International Society for Pharmacoeconomics and Outcomes Research Society for Medical Decision Making (ISPOR-SDM) Modeling Good Research Practices Task Force reports.

Note: In completing this evaluation for Kim et al. (2021), we sometimes refer to prior publications that initially described the model. These are:

- Glover et al. (2018) - “Discrete Event Simulation for Decision Modeling in Health Care: Lessons from Abdominal Aortic Aneurysm Screening”

- Thompson et al. (2018) - “Screening Women Aged 65 Years or over for Abdominal Aortic Aneurysm: A Modelling Study and Health Economic Evaluation”

STRESS-DES

Of the 24 items in the checklist:

- 15 were met fully (✅)

- 5 were partially met (🟡)

- 2 were not met (❌)

- 2 were not applicable (N/A)
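
As a cross-check on the counts above, the outcomes can be tallied programmatically. This is a minimal sketch in Python: the status labels are transcribed by hand from the "Met by study?" column of the table below, and the names `stress_des` and `tally` are illustrative and not part of the original evaluation.

```python
from collections import Counter

# Statuses transcribed by hand from the "Met by study?" column of the
# STRESS-DES table. Keys are checklist item numbers.
stress_des = {
    "1.1": "fully", "1.2": "fully", "1.3": "fully",
    "2.1": "fully", "2.2": "fully", "2.3": "fully", "2.4": "partially",
    "2.5.1": "fully", "2.5.2": "fully", "2.5.3": "n/a",
    "2.5.4": "n/a", "2.5.5": "fully",
    "3.1": "fully", "3.2": "fully", "3.3": "partially", "3.4": "fully",
    "4.1": "partially", "4.2": "fully", "4.3": "fully",
    "5.1": "partially", "5.2": "not met", "5.3": "not met",
    "5.4": "partially",
    "6.1": "fully",
}


def tally(statuses):
    """Count each outcome and the share of applicable items fully met."""
    counts = Counter(statuses.values())
    applicable = len(statuses) - counts["n/a"]
    share_fully = counts["fully"] / applicable
    return counts, applicable, share_fully


counts, applicable, share_fully = tally(stress_des)
print(dict(counts))
print(f"{counts['fully']} of {applicable} applicable items fully met "
      f"({share_fully:.0%})")
```

Excluding the N/A items from the denominator is a choice made for this sketch; the evaluation itself reports raw counts only.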

| Item | Recommendation | Met by study? | Evidence |

|---|---|---|---|

| Objectives | |||

| 1.1 Purpose of the model | Explain the background and objectives for the model | ✅ Fully | Introduction - Abdominal Aortic Aneurysm (AAA) “screening (including surveillance) in most areas of the UK was paused during the lockdown due to concerns about COVID-19 transmission”… this study uses a previously developed and validated model “to explore different approaches to post-lockdown service resumption” Kim et al. (2021) |

| 1.2 Model outputs | Define all quantitative performance measures that are reported, using equations where necessary. Specify how and when they are calculated during the model run along with how any measures of error such as confidence intervals are calculated. | ✅ Fully | Methods: Post-COVID-19 policy scenarios - “Total numbers of AAA-related deaths, operations (both elective and emergency) and ruptures over the whole follow-up period are recorded for each model. These clinical results are reported as percentage change from the status quo as well as expected increase in number of events when scaled to the population of England” Kim et al. (2021) |

| 1.3 Experimentation aims | If the model has been used for experimentation, state the objectives that it was used to investigate. (A) Scenario based analysis – Provide a name and description for each scenario, providing a rationale for the choice of scenarios and ensure that item 2.3 (below) is completed. (B) Design of experiments – Provide details of the overall design of the experiments with reference to performance measures and their parameters (provide further details in data below). (C) Simulation Optimisation – (if appropriate) Provide full details of what is to be optimised, the parameters that were included and the algorithm(s) that was be used. Where possible provide a citation of the algorithm(s). |

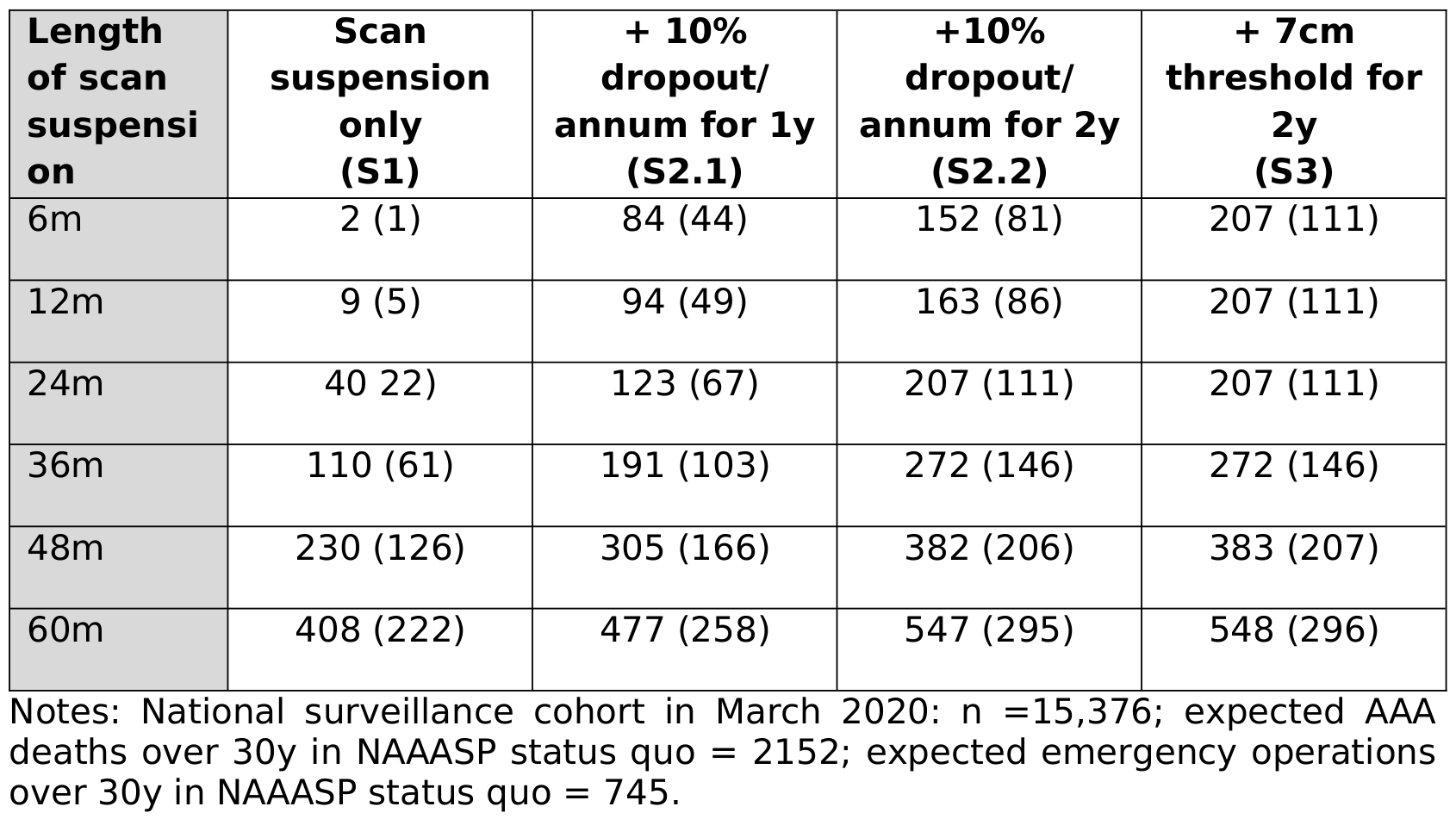

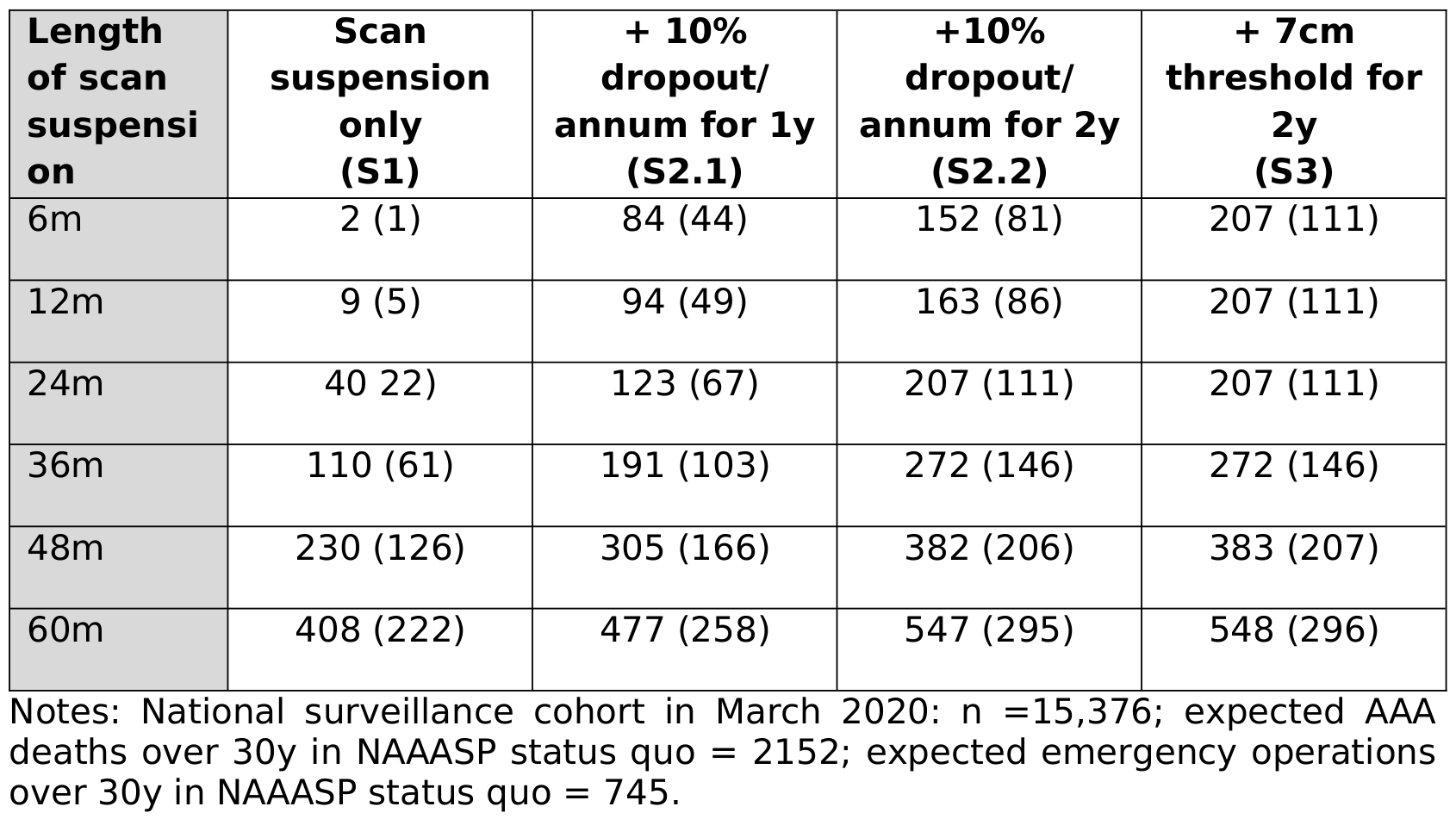

✅ Fully | Primary scenarios clearly described in Table 1.Supplementary scenarios not described as clearly, but can be easily understood from supplementary table 2, regardless.  Kim et al. (2021) |

| Logic | |||

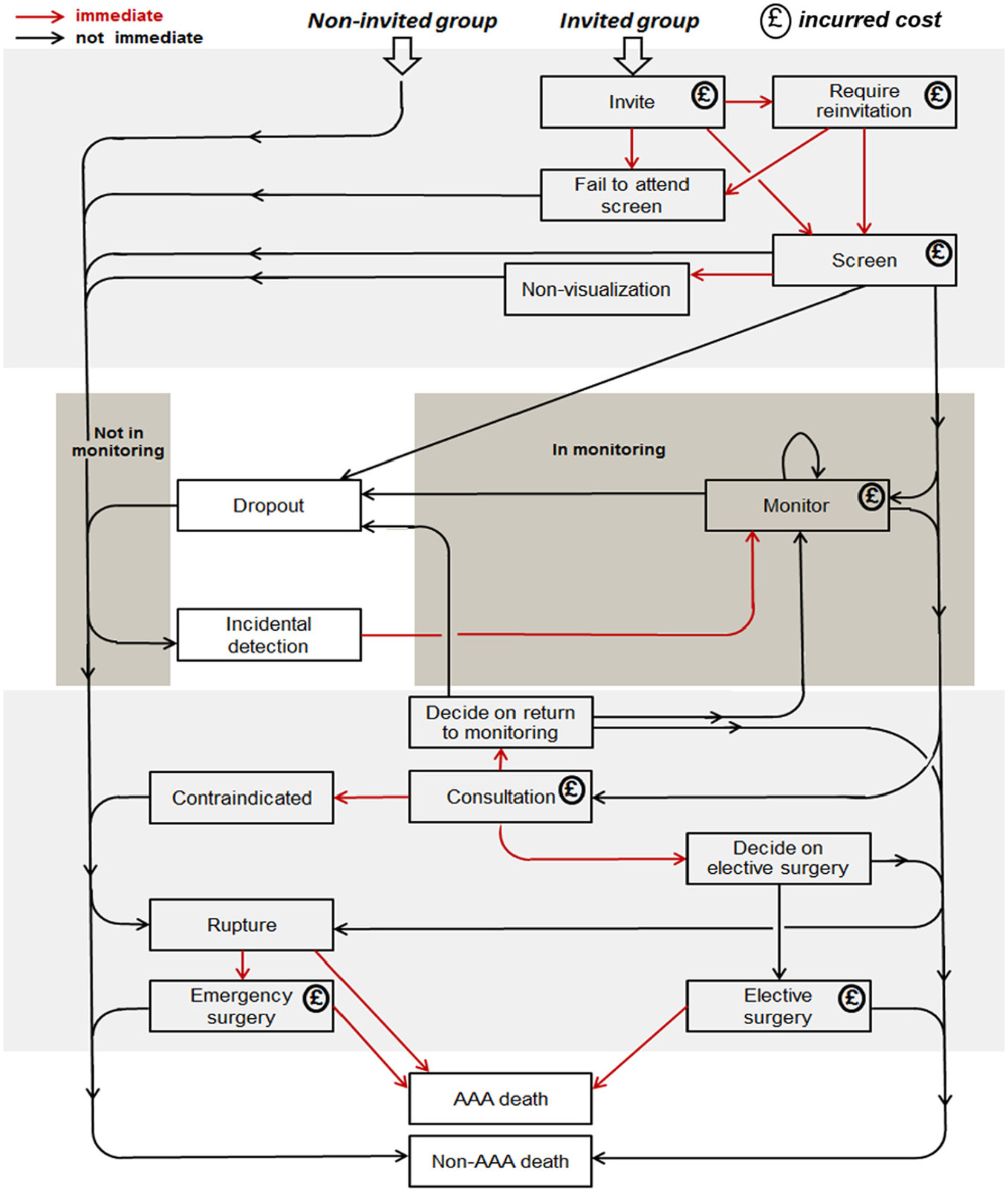

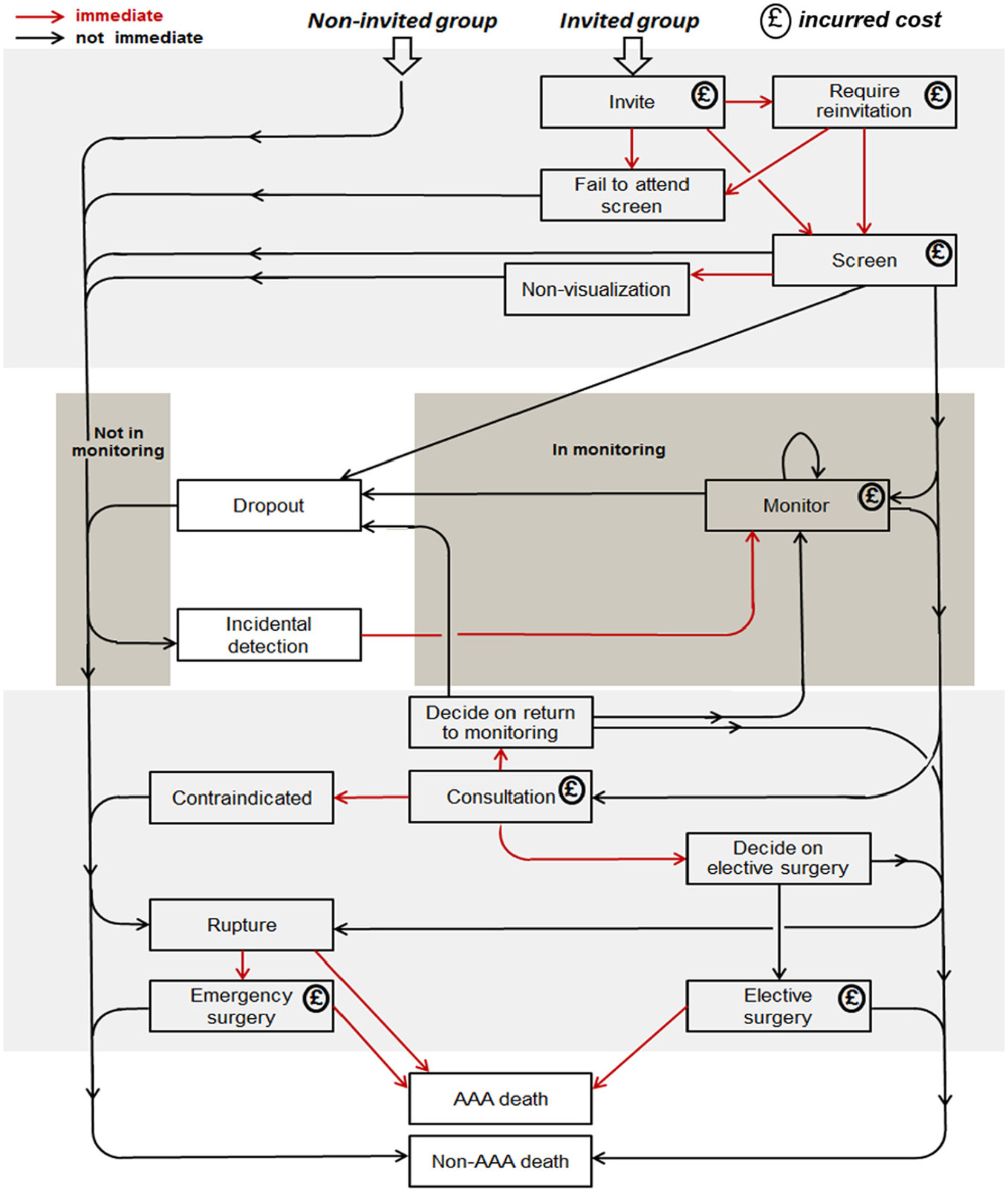

| 2.1 Base model overview diagram | Describe the base model using appropriate diagrams and description. This could include one or more process flow, activity cycle or equivalent diagrams sufficient to describe the model to readers. Avoid complicated diagrams in the main text. The goal is to describe the breadth and depth of the model with respect to the system being studied. | ✅ Fully | Methods: Model - “The full pathway reflecting both the natural history and screening programme is described in detail elsewhere” (Kim et al. (2021)), citing Glover et al. (2018) and Thompson et al. (2018). Glover et al. (2018) has a flow diagram (Figure 1). Some modifications to the model are described in Kim et al. (2021), but the figure from Glover et al. (2018) is considered to still be reasonably applicable (due to the level of detail of the figure). |

| 2.2 Base model logic | Give details of the base model logic. Give additional model logic details sufficient to communicate to the reader how the model works. | ✅ Fully | Methods: Model - “The full pathway reflecting both the natural history and screening programme is described in detail elsewhere” (Kim et al. (2021)), citing Glover et al. (2018) and Thompson et al. (2018). Then, it provides a simple description of the model, and how it differs from Glover et al. (2018). |

| 2.3 Scenario logic | Give details of the logical difference between the base case model and scenarios (if any). This could be incorporated as text or where differences are substantial could be incorporated in the same manner as 2.2. | ✅ Fully | Described in the text, and main scenarios clearly presented in Table 1. Kim et al. (2021) |

| 2.4 Algorithms | Provide further detail on any algorithms in the model that (for example) mimic complex or manual processes in the real world (i.e. scheduling of arrivals/ appointments/ operations/ maintenance, operation of a conveyor system, machine breakdowns, etc.). Sufficient detail should be included (or referred to in other published work) for the algorithms to be reproducible. Pseudo-code may be used to describe an algorithm. | 🟡 Partially | Not described in Kim et al. (2021). Glover et al. (2018) provides some (but not all) of the sampling distributions used. An excerpt: “The DES involves a large number of parameters. These can be classified into several sets: global fixed parameters, global uncertain parameters, and parameters that are specific to an individual or a pair of twins (“global” refers to population parameters and “uncertain” means that a parameter follows a random distribution). Like the functions, these sets form a hierarchy. For example, in a PSA, a beta distribution is used to generate the probability that an individual will die following emergency surgery, if they have emergency surgery…” Glover et al. (2018) |

| 2.5.1 Components - entities | Give details of all entities within the simulation including a description of their role in the model and a description of all their attributes. | ✅ Fully | Methods: Model - “The original DES model simulated events for a new cohort at a given age (e.g. 65) from the time of invitation to screening up to their date of death or age 95 (the time horizon). The repurposed DES model is extended here to allow events to be simulated from a cohort of individuals already under surveillance in the NAAASP, through simulation of key characteristics (age and aortic diameter) at the inception of the model (“time zero”), which is taken to be March 2020 when the initial UK national “lockdown” was imposed.” Kim et al. (2021) |

| 2.5.2 Components - activities | Describe the activities that entities engage in within the model. Provide details of entity routing into and out of the activity. | ✅ Fully | Not described in Kim et al. (2021). Glover et al. (2018) provides a detailed description of the model in the Methods and with Figure 1, from which we can identify activities. For example, Methods: Development of a Simulation Model - “events (rupture, surgical consultations, elective and emergency AAA repair, death)” |

| 2.5.3 Components - resources | List all the resources included within the model and which activities make use of them. | N/A | No description in Kim et al. (2021) or Glover et al. (2018) but, from what I understand of the model, I don’t think there are “resources” as such. |

| 2.5.4 Components - queues | Give details of the assumed queuing discipline used in the model (e.g. First in First Out, Last in First Out, prioritisation, etc.). Where one or more queues have a different discipline from the rest, provide a list of queues, indicating the queuing discipline used for each. If reneging, balking or jockeying occur, etc., provide details of the rules. Detail any delays or capacity constraints on the queues. | N/A | No description in Kim et al. (2021) or Glover et al. (2018) but, from what I understand of the model, I don’t think there are queues. |

| 2.5.5 Components - entry/exit points | Give details of the model boundaries i.e. all arrival and exit points of entities. Detail the arrival mechanism (e.g. ‘thinning’ to mimic a non-homogenous Poisson process or balking) | ✅ Fully | Methods: Model - entities are followed “at a given age (e.g. 65) from the time of invitation to screening up to their date of death or age 95 (time horizon)” Kim et al. (2021) |

| Data | |||

| 3.1 Data sources | List and detail all data sources. Sources may include: • Interviews with stakeholders, • Samples of routinely collected data, • Prospectively collected samples for the purpose of the simulation study, • Public domain data published in either academic or organisational literature. Provide, where possible, the link and DOI to the data or reference to published literature. All data source descriptions should include details of the sample size, sample date ranges and use within the study. | ✅ Fully | Provided for each input in Supplementary Table 1 |

| 3.2 Pre-processing | Provide details of any data manipulation that has taken place before its use in the simulation, e.g. interpolation to account for missing data or the removal of outliers. | ✅ Fully | Some processing is described in Supplementary Table 1 |

| 3.3 Input parameters | List all input variables in the model. Provide a description of their use and include parameter values. For stochastic inputs provide details of any continuous, discrete or empirical distributions used along with all associated parameters. Give details of all time dependent parameters and correlation. Clearly state: • Base case data • Data use in experimentation, where different from the base case. • Where optimisation or design of experiments has been used, state the range of values that parameters can take. • Where theoretical distributions are used, state how these were selected and prioritised above other candidate distributions. | 🟡 Partially | Inputs provided in Supplementary Table 1. Distributions are not provided. The seminal paper for this model (Glover et al. (2018)) describes some but not all of the distributions. |

| 3.4 Assumptions | Where data or knowledge of the real system is unavailable what assumptions are included in the model? This might include parameter values, distributions or routing logic within the model. | ✅ Fully | Has a section addressing this - Discussion: Modelling assumptions |

| Experimentation | |||

| 4.1 Initialisation | Report if the system modelled is terminating or non-terminating. State if a warm-up period has been used, its length and the analysis method used to select it. For terminating systems state the stopping condition. State what if any initial model conditions have been included, e.g., pre-loaded queues and activities. Report whether initialisation of these variables is deterministic or stochastic. | 🟡 Partially | Not described in Kim et al. (2021). Glover et al. (2018) mentions that events “occur as a continuous process over time” (i.e. non-terminating). I could not clearly identify, from either paper, whether a warm-up period or initial conditions were used. |

| 4.2 Run length | Detail the run length of the simulation model and time units. | ✅ Fully | Methods: Post-COVID-19 policy scenarios - “The models are run for a period of 30 years” Kim et al. (2021) |

| 4.3 Estimation approach | State the method used to account for the stochasticity: For example, two common methods are multiple replications or batch means. Where multiple replications have been used, state the number of replications and for batch means, indicate the batch length and whether the batch means procedure is standard, spaced or overlapping. For both procedures provide a justification for the methods used and the number of replications/size of batches. | ✅ Fully | Methods: Post-COVID-19 policy scenarios - “Each scenario model is run for 10 million hypothetical individuals… Model convergence is summarised using cumulative results from consecutive sub-runs each of 1 million individuals” (Supplementary Figure 1, Supplementary Figure 2) |

| Implementation | |||

| 5.1 Software or programming language | State the operating system and version and build number. State the name, version and build number of commercial or open source DES software that the model is implemented in. State the name and version of general-purpose programming languages used (e.g. Python 3.5). Where frameworks and libraries have been used provide all details including version numbers. | 🟡 Partially | Mentions that it uses R and gives a version, but does not mention packages or their versions. Methods: Model - “model has previously been developed in R version 3.6.3” Kim et al. (2021) |

| 5.2 Random sampling | State the algorithm used to generate random samples in the software/programming language used e.g. Mersenne Twister. If common random numbers are used, state how seeds (or random number streams) are distributed among sampling processes. | ❌ Not met | Couldn’t spot in Kim et al. (2021) or in Glover et al. (2018). |

| 5.3 Model execution | State the event processing mechanism used e.g. three phase, event, activity, process interaction. Note that in some commercial software the event processing mechanism may not be published. In these cases authors should adhere to item 5.1 software recommendations. State all priority rules included if entities/activities compete for resources. If the model is parallel, distributed and/or use grid or cloud computing, etc., state and preferably reference the technology used. For parallel and distributed simulations the time management algorithms used. If the HLA is used then state the version of the standard, which run-time infrastructure (and version), and any supporting documents (FOMs, etc.) | ❌ Not met | Could not identify the event processing mechanism in Kim et al. (2021) or Glover et al. (2018). Glover et al. (2018) mention that the code is “written to run in parallel”, but not the technology used (hence, marked as not met). From my understanding of the model, there are no queues, so priority rules are not relevant. |

| 5.4 System specification | State the model run time and specification of hardware used. This is particularly important for large scale models that require substantial computing power. For parallel, distributed and/or use grid or cloud computing, etc. state the details of all systems used in the implementation (processors, network, etc.) | 🟡 Partially | Not described in Kim et al. (2021). Glover et al. (2018) mentions that “run time was in the region of 24 h to run the model with 500,000 patients and 1,000 PSA iterations, even with parallelization and the use of a high-powered compute”. |

| Code access | |||

| 6.1 Computer model sharing statement | Describe how someone could obtain the model described in the paper, the simulation software and any other associated software (or hardware) needed to reproduce the results. Provide, where possible, the link and DOIs to these. | ✅ Fully | Data Availability Statement: “The DES model used in this work is available on a GitHub repository https://github.com/mikesweeting/AAA_DES_model” |
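
To illustrate what items 1.2 and 4.3 describe (cumulative results over consecutive sub-runs, percentage change from the status quo, and scaling to a population), here is a minimal sketch in Python. It is not the authors' R model: the sub-run is a hypothetical stand-in that draws independent events with a fixed probability, and the event probabilities, sub-run sizes, population size, seed and all function names are assumptions chosen purely for illustration.

```python
import random


def simulate_sub_run(n_individuals, event_prob, rng):
    """Hypothetical stand-in for one sub-run: count individuals with an event."""
    return sum(rng.random() < event_prob for _ in range(n_individuals))


def cumulative_rates(n_sub_runs, n_per_sub_run, event_prob, seed):
    """Event rate after each consecutive sub-run, pooled over all runs so far."""
    rng = random.Random(seed)  # Python's random module uses Mersenne Twister
    total_events = 0
    rates = []
    for k in range(1, n_sub_runs + 1):
        total_events += simulate_sub_run(n_per_sub_run, event_prob, rng)
        rates.append(total_events / (k * n_per_sub_run))
    return rates


def percentage_change(scenario_rate, status_quo_rate):
    """Percentage change of a scenario relative to the status quo."""
    return 100.0 * (scenario_rate - status_quo_rate) / status_quo_rate


def scaled_extra_events(scenario_rate, status_quo_rate, population):
    """Expected additional events when the rate difference is scaled up."""
    return (scenario_rate - status_quo_rate) * population


# Illustrative values only (assumptions, not taken from the paper).
# The same seed is reused so both scenarios draw the same random stream.
status_quo = cumulative_rates(10, 10_000, event_prob=0.010, seed=42)
scenario = cumulative_rates(10, 10_000, event_prob=0.012, seed=42)

# Convergence check: the pooled rate should settle as sub-runs accumulate.
for k, (sq, sc) in enumerate(zip(status_quo, scenario), start=1):
    print(f"after {k:2d} sub-runs: status quo {sq:.5f}, scenario {sc:.5f}")

change = percentage_change(scenario[-1], status_quo[-1])
extra = scaled_extra_events(scenario[-1], status_quo[-1], population=100_000)
print(f"percentage change: {change:.1f}%, extra events per 100,000: {extra:.0f}")
```

Plotting the pooled rate against the number of sub-runs gives the kind of convergence summary the paper reports in its supplementary figures, although the quantities tracked there may differ from this simplified event rate.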

DES checklist derived from ISPOR-SDM

Of the 18 items in the checklist:

- 12 were met fully (✅)

- 0 were partially met (🟡)

- 5 were not met (❌)

- 1 was not applicable (N/A)

| Item | Assessed if… | Met by study? | Evidence/location |

|---|---|---|---|

| Model conceptualisation | |||

| 1 Is the focused health-related decision problem clarified? | …the decision problem under investigation was defined. DES studies included different types of decision problems, eg, those listed in previously developed taxonomies. | ✅ Fully | Introduction - explained in detail - e.g. COVID-19 disrupted “repair of Abdominal Aortic Aneurysms (AAA). Furthermore, AAA screening (including surveillance) in most areas of the UK was paused during the lockdown due to concerns about COVID-19 transmission… Ruptured AAA carries a high mortality and screening for AAA is offered to men in their 65th year throughout England via the NHS Abdominal Aortic Aneurysm Screening Program (NAAASP)…”. This explores “different approaches to post-lockdown service resumption” |

| 2 Is the modeled healthcare setting/health condition clarified? | …the physical context/scope (eg, a certain healthcare unit or a broader system) or disease spectrum simulated was described. | ✅ Fully | Introduction - nationwide, e.g. “NHS Abdominal Aortic Aneurysm Screening Program (NAAASP)” Kim et al. (2021) |

| 3 Is the model structure described? | …the model’s conceptual structure was described in the form of either graphical or text presentation. | ✅ Fully | Methods: Model - “The full pathway reflecting both the natural history and screening programme is described in detail elsewhere” (Kim et al. (2021)), citing Glover et al. (2018) and Thompson et al. (2018). Glover et al. (2018) has a flow diagram (Figure 1). Kim et al. (2021) provides a simple description of the model, and how it differs from Glover et al. (2018). |

| 4 Is the time horizon given? | …the time period covered by the simulation was reported. | ✅ Fully | Methods: Post-COVID-19 policy scenarios - “The models are run for a period of 30 years” Kim et al. (2021) |

| 5 Are all simulated strategies/scenarios specified? | …the comparators under test were described in terms of their components, corresponding variations, etc | ✅ Fully | Primary scenarios clearly described in Table 1. Supplementary scenarios are not described as clearly, but can still be easily understood from Supplementary Table 2. Kim et al. (2021) |

| 6 Is the target population described? | …the entities simulated and their main attributes were characterized. | ✅ Fully | Introduction - e.g. “Circa 300,000 men are offered screening annually, of whom around 1% are found to have an AAA, whilst approximately 15,000 men are currently under surveillance in the programme.” Kim et al. (2021) |

| Parameterisation and uncertainty assessment | |||

| 7 Are data sources informing parameter estimations provided? | …the sources of all data used to inform model inputs were reported. | ✅ Fully | Provided for each input in Supplementary Table 1 |

| 8 Are the parameters used to populate model frameworks specified? | …all relevant parameters fed into model frameworks were disclosed. | ✅ Fully | Inputs provided in Supplementary Table 1. |

| 9 Are model uncertainties discussed? | …the uncertainty surrounding parameter estimations and adopted statistical methods (eg, 95% confidence intervals or possibility distributions) were reported. | ❌ Not met | No - only presents counts or percentage change. |

| 10 Are sensitivity analyses performed and reported? | …the robustness of model outputs to input uncertainties was examined, for example via deterministic (based on parameters’ plausible ranges) or probabilistic (based on a priori-defined probability distributions) sensitivity analyses, or both. | ❌ Not met | None mentioned in paper. |

| Validation | |||

| 11 Is face validity evaluated and reported? | …it was reported that the model was subjected to the examination on how well model designs correspond to the reality and intuitions. It was assumed that this type of validation should be conducted by external evaluators with no stake in the study. | ❌ Not met | I couldn’t spot any in this paper or the prior study. |

| 12 Is cross validation performed and reported | …comparison across similar modeling studies which deal with the same decision problem was undertaken. | ✅ Fully | Discussion: Strengths and limitations - “DES has been well validated against… a previous Markov model, producing reliable estimates of events over the trial follow-up.” In the discussion, they compare against a “recent modelling study in the United States [that] explored the trade-off between COVID-19 mortality and AAA-related mortality” Kim et al. (2021) |

| 13 Is external validation performed and reported? | …the modeler(s) examined how well the model’s results match the empirical data of an actual event modeled. | ✅ Fully | Discussion: Strengths and limitations - “DES has been well validated against data from the Multicentre Aneurysm Screening Study” Kim et al. (2021) |

| 14 Is predictive validation performed or attempted? | …the modeler(s) examined the consistency of a model’s predictions of a future event and the actual outcomes in the future. If this was not undertaken, it was assessed whether the reasons were discussed. | N/A | Only relevant to forecasting studies. |

| Generalisability and stakeholder involvement | |||

| 15 Is the model generalizability issue discussed? | …the modeler(s) discussed the potential of the resulting model for being applicable to other settings/populations (single/multiple application). | ❌ Not met | Couldn’t spot anything in the paper. |

| 16 Are decision makers or other stakeholders involved in modeling? | …the modeler(s) reported in which part throughout the modeling process decision makers and other stakeholders (eg, subject experts) were engaged. | ❌ Not met | Couldn’t spot anything in this paper or the prior study. |

| 17 Is the source of funding stated? | …the sponsorship of the study was indicated. | ✅ Fully | “This work was supported by core funding from: the UK Medical Research Council (MR/L003120/1), the British Heart Foundation (RG/13/13/30194) and the NIHR Cambridge Biomedical Research Centre (BRC) [The views expressed are those of the author(s) and not necessarily those of the NIHR or the Department of Health and Social Care]. LGK is funded by the NIHR Blood and Transplant Research Unit in Donor Health and Genomics (NIHR BTRU-2014-10024). SCH is funded by an MRC CARP Fellowship (Mr/T023783/1). https://mrc.ukri.org/ https://www.bhf.org.uk/for-professionals https://cambridgebrc.nihr.ac.uk/ http://www.donorhealth-btru.nihr.ac.uk/. The sponsors and funders played no role in study design, data collection, decision to publish or preparation of the manuscript.” Kim et al. (2021) |

| 18 Are model limitations discussed? | …limitations of the assessed model, especially limitations of interest to decision makers, were discussed. | ✅ Fully | Discussion: Strengths and limitations - e.g. “There are a number of simplifications relating to model structure that were necessary for carrying out this COVID-19-related modelling work… In addition to these structural assumptions, there are also challenges associated with extrapolating the underlying models of AAA growth and rupture rates to this setting…” |

References

Glover, Matthew J., Edmund Jones, Katya L. Masconi, Michael J. Sweeting, Simon G. Thompson, Janet T. Powell, Pinar Ulug, and Matthew J. Bown. 2018. “Discrete Event Simulation for Decision Modeling in Health Care: Lessons from Abdominal Aortic Aneurysm Screening.” Medical Decision Making 38 (4): 439–51. https://doi.org/10.1177/0272989X17753380.

Kim, Lois G., Michael J. Sweeting, Morag Armer, Jo Jacomelli, Akhtar Nasim, and Seamus C. Harrison. 2021. “Modelling the Impact of Changes to Abdominal Aortic Aneurysm Screening and Treatment Services in England During the COVID-19 Pandemic.” PLOS ONE 16 (6): e0253327. https://doi.org/10.1371/journal.pone.0253327.

Monks, Thomas, Christine S. M. Currie, Bhakti Stephan Onggo, Stewart Robinson, Martin Kunc, and Simon J. E. Taylor. 2019. “Strengthening the Reporting of Empirical Simulation Studies: Introducing the STRESS Guidelines.” Journal of Simulation 13 (1): 55–67. https://doi.org/10.1080/17477778.2018.1442155.

Thompson, Simon G., Matthew J. Bown, Matthew J. Glover, Edmund Jones, Katya L. Masconi, Jonathan A. Michaels, Janet T. Powell, Pinar Ulug, and Michael J. Sweeting. 2018. “Screening Women Aged 65 Years or over for Abdominal Aortic Aneurysm: A Modelling Study and Health Economic Evaluation.” Health Technology Assessment 22 (43): 1–142. https://doi.org/10.3310/hta22430.

Zhang, Xiange, Stefan K. Lhachimi, and Wolf H. Rogowski. 2020. “Reporting Quality of Discrete Event Simulations in Healthcare—Results From a Generic Reporting Checklist.” Value in Health 23 (4): 506–14. https://doi.org/10.1016/j.jval.2020.01.005.