Reporting guidelines

This page evaluates the extent to which the journal article meets the criteria from two discrete-event simulation study reporting guidelines:

- Monks et al. (2019) - STRESS-DES: Strengthening The Reporting of Empirical Simulation Studies (Discrete-Event Simulation) (Version 1.0).

- Zhang, Lhachimi, and Rogowski (2020) - The generic reporting checklist for healthcare-related discrete event simulation studies derived from the International Society for Pharmacoeconomics and Outcomes Research-Society for Medical Decision Making (ISPOR-SDM) Modeling Good Research Practices Task Force reports.

STRESS-DES

Of the 24 items in the checklist:

- 14 were met fully (✅)

- 5 were partially met (🟡)

- 4 were not met (❌)

- 1 was not applicable (N/A)
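
The tally above can be checked against the table that follows. The snippet below is a minimal sketch: the ratings are transcribed by hand from the "Met by study?" column, and the names `STRESS_DES_RATINGS` and `summarise` are illustrative only.

```python
from collections import Counter

# Ratings transcribed from the "Met by study?" column of the STRESS-DES table.
STRESS_DES_RATINGS = {
    "1.1": "fully", "1.2": "fully", "1.3": "fully",
    "2.1": "fully", "2.2": "fully", "2.3": "fully", "2.4": "partially",
    "2.5.1": "fully", "2.5.2": "fully", "2.5.3": "fully",
    "2.5.4": "fully", "2.5.5": "fully",
    "3.1": "fully", "3.2": "n/a", "3.3": "partially", "3.4": "not met",
    "4.1": "not met", "4.2": "fully", "4.3": "partially",
    "5.1": "partially", "5.2": "not met", "5.3": "partially",
    "5.4": "not met",
    "6.1": "fully",
}


def summarise(ratings):
    """Return {rating: (count, share of all items)} for a checklist."""
    counts = Counter(ratings.values())
    total = len(ratings)
    return {rating: (n, n / total) for rating, n in counts.items()}


summary = summarise(STRESS_DES_RATINGS)
for rating, (n, share) in summary.items():
    print(f"{rating}: {n}/{len(STRESS_DES_RATINGS)} ({share:.0%})")
```

The same function could be applied to the ISPOR-SDM ratings by swapping in a second dictionary.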

| Item | Recommendation | Met by study? | Evidence |

|---|---|---|---|

| Objectives | |||

| 1.1 Purpose of the model | Explain the background and objectives for the model | ✅ Fully | Introduction: “Endovascular clot retrieval (ECR) is the first-line treatment for acute ischemic stroke (AIS) due to arterial large vessel occlusion (LVO) with several trials demonstrating its efficacy in reducing mortality and morbidity (1–3). However, ECR is considerably more costly than traditional care (4), with estimated procedure costs ranging between 9,000 and 14,000 US dollars per patient (4, 5). Major expenditure is required for capital equipment such as angiography equipment purchase and maintenance. Staffing must be adequate to deliver a 24/7 rapid response service. Government funding agencies seek to optimize return on investment, such as that on resources allocated to acute stroke services. In contrast to other healthcare fields, a resource-use optimization model has not been implemented for comprehensive stroke services.” Huang et al. (2019) |

| 1.2 Model outputs | Define all quantitative performance measures that are reported, using equations where necessary. Specify how and when they are calculated during the model run along with how any measures of error such as confidence intervals are calculated. | ✅ Fully | Outcome Measures: “We examined two outcome measures in this model: the patient wait time and resource utilization rate. “Patient wait time” is the time spent queuing for a resource. “Resource utilization rate” represents the median occupancy rate.” Statistics and software: “To facilitate graphical and descriptive comparison across models, we express waiting times as relative probabilities of waiting a given amount of time, compared to not waiting at all.” Huang et al. (2019) |

| 1.3 Experimentation aims | If the model has been used for experimentation, state the objectives that it was used to investigate. (A) Scenario based analysis – Provide a name and description for each scenario, providing a rationale for the choice of scenarios and ensure that item 2.3 (below) is completed. (B) Design of experiments – Provide details of the overall design of the experiments with reference to performance measures and their parameters (provide further details in data below). (C) Simulation Optimisation – (if appropriate) Provide full details of what is to be optimised, the parameters that were included and the algorithm(s) that were used. Where possible provide a citation of the algorithm(s). | ✅ Fully | All scenarios are described and justified. Results: “To investigate why a bottleneck exists at angioINR, we tested three scenarios with varying degrees of patient accessibility to angioINR. First, in the “exclusive-use” scenario, angioINR is not available for elective IR patients. Its use is restricted to stroke, elective INR and emergency IR patients. Second, in the “two angioINRs” scenario, the angioIR is replaced with an angioINR, doubling angiography availability for ECR patients. Lastly, in the “extended schedule” scenario, day time working hours of all human resources are extended by up to 2 h, extending resource access to all patients.” Results: Using DES to Predict Future Resource Usage: “Since acquiring data for this study, the demands for ECR at our Comprehensive Stroke Service has doubled between 2018 and 19 and is predicted to triple by the end of 2019. We simulated these increased demands on the resource.” Huang et al. (2019) |

| Logic | |||

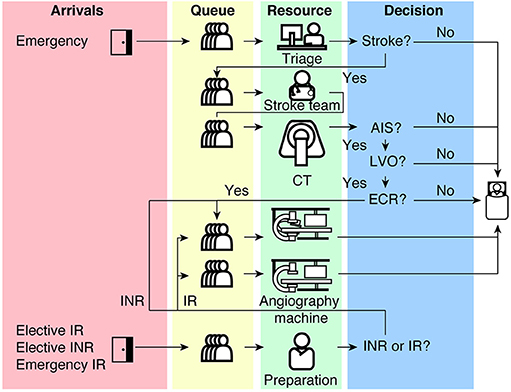

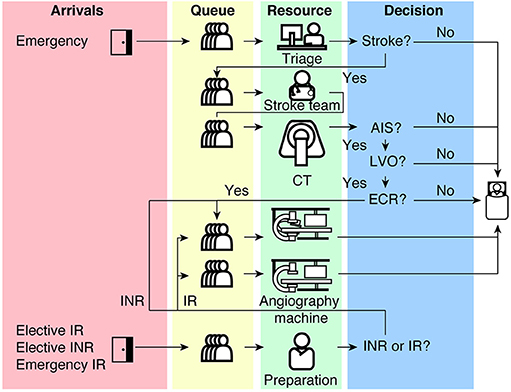

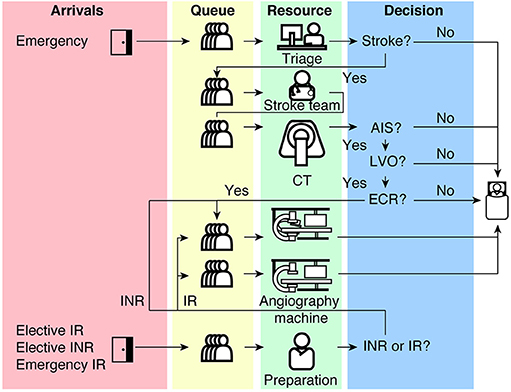

| 2.1 Base model overview diagram | Describe the base model using appropriate diagrams and description. This could include one or more process flow, activity cycle or equivalent diagrams sufficient to describe the model to readers. Avoid complicated diagrams in the main text. The goal is to describe the breadth and depth of the model with respect to the system being studied. | ✅ Fully | Figure 1: Huang et al. (2019) |

| 2.2 Base model logic | Give details of the base model logic. Give additional model logic details sufficient to communicate to the reader how the model works. | ✅ Fully | Detailed in Methods: Model Algorithm |

| 2.3 Scenario logic | Give details of the logical difference between the base case model and scenarios (if any). This could be incorporated as text or where differences are substantial could be incorporated in the same manner as 2.2. | ✅ Fully | As in 1.3. |

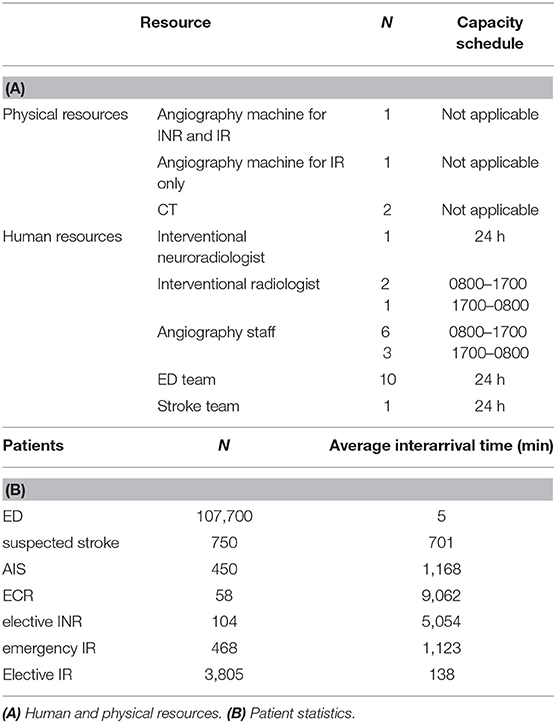

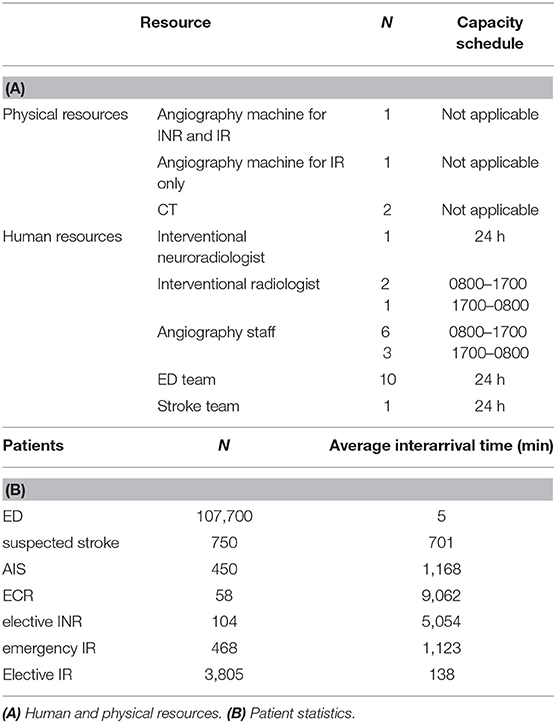

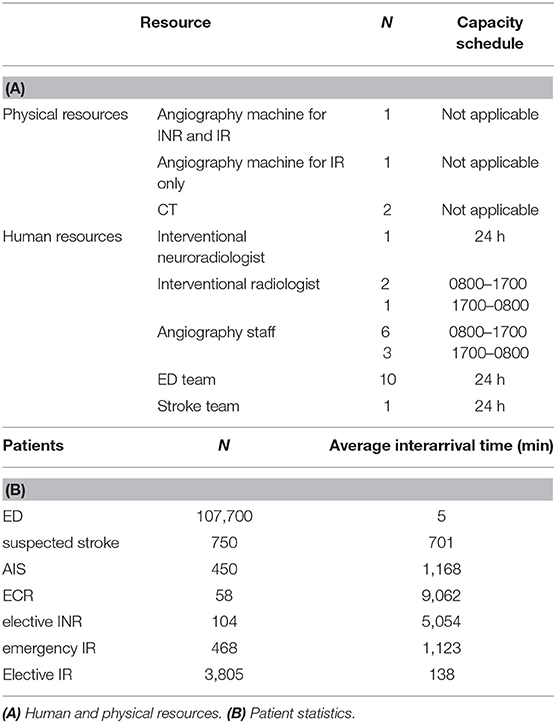

| 2.4 Algorithms | Provide further detail on any algorithms in the model that (for example) mimic complex or manual processes in the real world (i.e. scheduling of arrivals/ appointments/ operations/ maintenance, operation of a conveyor system, machine breakdowns, etc.). Sufficient detail should be included (or referred to in other published work) for the algorithms to be reproducible. Pseudo-code may be used to describe an algorithm. | 🟡 Partially | Methods: Model Properties: Patients: “Patients are generated by a Poisson process with an inter-arrival time as specified in Table 1.” Huang et al. (2019) The paper does not describe some of the other processes found in the code (e.g. sampling of appointment length, or how suspected stroke / AIS / ECR patients are not generated directly from inter-arrival times but are instead probability based). |

| 2.5.1 Components - entities | Give details of all entities within the simulation including a description of their role in the model and a description of all their attributes. | ✅ Fully | Describes all four patient types in Methods: Model Algorithm - “(1) a stroke pathway, (2) an elective non-stroke interventional neuroradiology (elective INR) pathway, (3) an emergency interventional radiology (emergency IR) pathway and (4) an elective interventional radiology (elective IR) pathway.” Huang et al. (2019) |

| 2.5.2 Components - activities | Describe the activities that entities engage in within the model. Provide details of entity routing into and out of the activity. | ✅ Fully | Described in Methods: Model Algorithm and visualised in Figure 1. Huang et al. (2019) |

| 2.5.3 Components - resources | List all the resources included within the model and which activities make use of them. | ✅ Fully | Methods: “resources represent human and physical resources such as interventional radiologist (IR), interventional neuroradiologist (INR), stroke physician, nurse, radiology technologist, CT scanner, single plane (angioIR), and biplane (angioINR) angiography suites.” Use described in Methods: Model Algorithm and visualised in Figure 1. Huang et al. (2019) |

| 2.5.4 Components - queues | Give details of the assumed queuing discipline used in the model (e.g. First in First Out, Last in First Out, prioritisation, etc.). Where one or more queues have a different discipline from the rest, provide a list of queues, indicating the queuing discipline used for each. If reneging, balking or jockeying occur, etc., provide details of the rules. Detail any delays or capacity constraints on the queues. | ✅ Fully | Methods: Model Properties: Queueing: “In the real world, resources are preferentially given to emergency patients over elective or non-emergency patients. In our model, emergency IR and stroke patients have higher priority than elective patients for resources. Specifically, angioINRs are capable of both INR and IR procedures, although all patient types can utilize this resource, stroke patients have priority compared to other patient types. Emergency IR patients are next in line, followed by elective patients. For example, if a stroke patient and an emergency IR patient enter a queue with 10 elective patients for angioINR, the stroke patient will automatically be placed in front of the queue followed by the emergency IR patient. For an angiography machine for IR procedures only (angioIR), emergency IR patients have priority over elective IR patients. When no resources are available, but multiple resource choices are present, a patient automatically enters the resource queue with the least number of entities (i.e., the shortest queue).” Huang et al. (2019) |

| 2.5.5 Components - entry/exit points | Give details of the model boundaries i.e. all arrival and exit points of entities. Detail the arrival mechanism (e.g. ‘thinning’ to mimic a non-homogenous Poisson process or balking) | ✅ Fully | Easily understood from Figure 1. Huang et al. (2019) |

| Data | |||

| 3.1 Data sources | List and detail all data sources. Sources may include: • Interviews with stakeholders, • Samples of routinely collected data, • Prospectively collected samples for the purpose of the simulation study, • Public domain data published in either academic or organisational literature. Provide, where possible, the link and DOI to the data or reference to published literature. All data source descriptions should include details of the sample size, sample date ranges and use within the study. | ✅ Fully | Methods: Model Algorithm: “The decision to proceed to the next event is probabilistic and is acquired from logged data from a Comprehensive Stroke Service in Melbourne, Australia, between 2016 and 17” Model Properties: Patients: “Inter-arrival times are calculated from patient statistics which were obtained from logged data from a Comprehensive Stroke Service in Melbourne, Australia between 2016 and 17.” Huang et al. (2019) |

| 3.2 Pre-processing | Provide details of any data manipulation that has taken place before its use in the simulation, e.g. interpolation to account for missing data or the removal of outliers. | N/A | None provided, so presumed not applicable. |

| 3.3 Input parameters | List all input variables in the model. Provide a description of their use and include parameter values. For stochastic inputs provide details of any continuous, discrete or empirical distributions used along with all associated parameters. Give details of all time dependent parameters and correlation. Clearly state: • Base case data • Data use in experimentation, where different from the base case. • Where optimisation or design of experiments has been used, state the range of values that parameters can take. • Where theoretical distributions are used, state how these were selected and prioritised above other candidate distributions. | 🟡 Partially | Many are provided in Table 1, although some parameters are not described (e.g. length of time with resources). Huang et al. (2019) |

| 3.4 Assumptions | Where data or knowledge of the real system is unavailable what assumptions are included in the model? This might include parameter values, distributions or routing logic within the model. | ❌ Not met | Cannot identify in paper. |

| Experimentation | |||

| 4.1 Initialisation | Report if the system modelled is terminating or non-terminating. State if a warm-up period has been used, its length and the analysis method used to select it. For terminating systems state the stopping condition. State what if any initial model conditions have been included, e.g., pre-loaded queues and activities. Report whether initialisation of these variables is deterministic or stochastic. | ❌ Not met | Not described. |

| 4.2 Run length | Detail the run length of the simulation model and time units. | ✅ Fully | Methods: Statistics and Software: “Each scenario has a runtime of 365 days” Huang et al. (2019) |

| 4.3 Estimation approach | State the method used to account for the stochasticity: For example, two common methods are multiple replications or batch means. Where multiple replications have been used, state the number of replications and for batch means, indicate the batch length and whether the batch means procedure is standard, spaced or overlapping. For both procedures provide a justification for the methods used and the number of replications/size of batches. | 🟡 Partially | Number of replications stated but not justified. Methods: Statistics and Software: “Each scenario… was simulated 30 times” Huang et al. (2019) |

| Implementation | |||

| 5.1 Software or programming language | State the operating system and version and build number. State the name, version and build number of commercial or open source DES software that the model is implemented in. State the name and version of general-purpose programming languages used (e.g. Python 3.5). Where frameworks and libraries have been used provide all details including version numbers. | 🟡 Partially | Some details provided - Methods: Statistics and Software: “The DES model was built with Simmer (version 4.1.0), a DES package for R. The interactive web application was built with R-Shiny” Huang et al. (2019) |

| 5.2 Random sampling | State the algorithm used to generate random samples in the software/programming language used e.g. Mersenne Twister. If common random numbers are used, state how seeds (or random number streams) are distributed among sampling processes. | ❌ Not met | Does not mention the sampling algorithm or whether seeds or random number streams are used (although the code shows that they are not). |

| 5.3 Model execution | State the event processing mechanism used e.g. three phase, event, activity, process interaction. Note that in some commercial software the event processing mechanism may not be published. In these cases authors should adhere to item 5.1 software recommendations. State all priority rules included if entities/activities compete for resources. If the model is parallel, distributed and/or use grid or cloud computing, etc., state and preferably reference the technology used. For parallel and distributed simulations the time management algorithms used. If the HLA is used then state the version of the standard, which run-time infrastructure (and version), and any supporting documents (FOMs, etc.) | 🟡 Partially | Does not state event processing mechanism. Does describe priority rules - Methods: Model Properties: Queueing - e.g. “In our model, emergency IR and stroke patients have higher priority than elective patients for resources. Specifically, angioINRs are capable of both INR and IR procedures, although all patient types…” |

| 5.4 System specification | State the model run time and specification of hardware used. This is particularly important for large scale models that require substantial computing power. For parallel, distributed and/or use grid or cloud computing, etc. state the details of all systems used in the implementation (processors, network, etc.) | ❌ Not met | - |

| Code access | |||

| 6.1 Computer model sharing statement | Describe how someone could obtain the model described in the paper, the simulation software and any other associated software (or hardware) needed to reproduce the results. Provide, where possible, the link and DOIs to these. | ✅ Fully | Methods: “The source code for the model is available at https://github.com/shiweih/desECR under a GNU General Public License.” Methods: Statistics and Software: “DES model was built with Simmer (version 4.1.0), a DES package for R. The interactive web application was built with R-Shiny” Discussion: “The model is currently available online at https://rebrand.ly/desECR11” Huang et al. (2019) |
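
Items 2.4, 4.3 and 5.2 above ask for the arrival algorithm, the replication approach and the random number handling to be reported. As a rough illustration of what such a description could cover, the Python sketch below generates Poisson arrivals from exponential inter-arrival times, gives each replication its own seed, and summarises 30 replications of 365 days with a 95% confidence interval. It is not the authors' R/simmer code. Only the run length and the number of replications come from the paper; the mean inter-arrival time, the function name `count_arrivals` and the seeding scheme are hypothetical.

```python
import random
import statistics

RUN_LENGTH_DAYS = 365  # run length reported in the paper
N_REPLICATIONS = 30    # number of replications reported in the paper
MEAN_IAT_DAYS = 0.5    # hypothetical value, NOT taken from Table 1
T_CRIT_29_DF = 2.045   # two-sided 95% t critical value for 29 degrees of freedom


def count_arrivals(seed, mean_iat=MEAN_IAT_DAYS, run_length=RUN_LENGTH_DAYS):
    """Count arrivals of a Poisson process over one simulated run.

    Inter-arrival times are exponential with mean `mean_iat`. Each
    replication gets its own seeded generator so a run can be repeated.
    """
    rng = random.Random(seed)  # Python's generator is a Mersenne Twister
    clock = 0.0
    arrivals = 0
    while True:
        clock += rng.expovariate(1.0 / mean_iat)
        if clock > run_length:
            return arrivals
        arrivals += 1


# One seed per replication (an assumed scheme, for illustration only).
results = [count_arrivals(seed) for seed in range(N_REPLICATIONS)]

mean_arrivals = statistics.mean(results)
half_width = T_CRIT_29_DF * statistics.stdev(results) / N_REPLICATIONS ** 0.5
print(f"Mean arrivals per run: {mean_arrivals:.1f} "
      f"(95% CI {mean_arrivals - half_width:.1f} to "
      f"{mean_arrivals + half_width:.1f})")
```

A report that stated the generator, the seed used for each replication and the width of the resulting interval would address item 5.2 and the justification asked for in item 4.3.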

DES checklist derived from ISPOR-SDM

Of the 18 items in the checklist:

- 7 were met fully (✅)

- 2 were partially met (🟡)

- 7 were not met (❌)

- 2 were not applicable (N/A)

| Item | Assessed if… | Met by study? | Evidence/location |

|---|---|---|---|

| Model conceptualisation | |||

| 1 Is the focused health-related decision problem clarified? | …the decision problem under investigation was defined. DES studies included different types of decision problems, eg, those listed in previously developed taxonomies. | ✅ Fully | ECR resource utilisation, as in Introduction. |

| 2 Is the modeled healthcare setting/health condition clarified? | …the physical context/scope (eg, a certain healthcare unit or a broader system) or disease spectrum simulated was described. | ✅ Fully | Implicit that it is a single hospital, and the relevant pathways for different patient types are described in the Methods: Model Algorithm. |

| 3 Is the model structure described? | …the model’s conceptual structure was described in the form of either graphical or text presentation. | ✅ Fully | Described in Methods: Model Algorithm and visualised in Figure 1: Huang et al. (2019) |

| 4 Is the time horizon given? | …the time period covered by the simulation was reported. | ✅ Fully | Methods: Statistics and Software: “Each scenario has a runtime of 365 days” Huang et al. (2019) |

| 5 Are all simulated strategies/scenarios specified? | …the comparators under test were described in terms of their components, corresponding variations, etc | ✅ Fully | All scenarios are specified. Results: “To investigate why a bottleneck exists at angioINR, we tested three scenarios with varying degrees of patient accessibility to angioINR. First, in the “exclusive-use” scenario, angioINR is not available for elective IR patients. Its use is restricted to stroke, elective INR and emergency IR patients. Second, in the “two angioINRs” scenario, the angioIR is replaced with an angioINR, doubling angiography availability for ECR patients. Lastly, in the “extended schedule” scenario, day time working hours of all human resources are extended by up to 2 h, extending resource access to all patients.” Results: Using DES to Predict Future Resource Usage: “Since acquiring data for this study, the demands for ECR at our Comprehensive Stroke Service has doubled between 2018 and 19 and is predicted to triple by the end of 2019. We simulated these increased demands on the resource.” Huang et al. (2019) |

| 6 Is the target population described? | …the entities simulated and their main attributes were characterized. | ❌ Not met | - |

| Parameterisation and uncertainty assessment | |||

| 7 Are data sources informing parameter estimations provided? | …the sources of all data used to inform model inputs were reported. | ✅ Fully | Methods: Model Algorithm: “The decision to proceed to the next event is probabilistic and is acquired from logged data from a Comprehensive Stroke Service in Melbourne, Australia, between 2016 and 17” Model Properties: Patients: “Inter-arrival times are calculated from patient statistics which were obtained from logged data from a Comprehensive Stroke Service in Melbourne, Australia between 2016 and 17.” Huang et al. (2019) |

| 8 Are the parameters used to populate model frameworks specified? | …all relevant parameters fed into model frameworks were disclosed. | 🟡 Partially | Many are provided in Table 1, although some parameters are not described (e.g. length of time with resources). Huang et al. (2019) |

| 9 Are model uncertainties discussed? | …the uncertainty surrounding parameter estimations and adopted statistical methods (eg, 95% confidence intervals or possibility distributions) were reported. | ❌ Not met | - |

| 10 Are sensitivity analyses performed and reported? | …the robustness of model outputs to input uncertainties was examined, for example via deterministic (based on parameters’ plausible ranges) or probabilistic (based on a priori-defined probability distributions) sensitivity analyses, or both. | ❌ Not met | The Discussion does mention that “The quality of the ECR service appears to be robust to important parameters, such as the number of radiologists”, but no sensitivity analysis is reported. |

| Validation | |||

| 11 Is face validity evaluated and reported? | …it was reported that the model was subjected to the examination on how well model designs correspond to the reality and intuitions. It was assumed that this type of validation should be conducted by external evaluators with no stake in the study. | ❌ Not met | - |

| 12 Is cross validation performed and reported? | …comparison across similar modeling studies which deal with the same decision problem was undertaken. | ❌ Not met | - |

| 13 Is external validation performed and reported? | …the modeler(s) examined how well the model’s results match the empirical data of an actual event modeled. | N/A | Discussion: “In general, a limitation of the current implementation is that few measurements exist to parameterize or validate many aspects of the simulation, because such records are not routinely kept. However, explicitly modeling the workflow can allow administrators to keep track of key parameters and performance, improving the model over time.” Huang et al. (2019) |

| 14 Is predictive validation performed or attempted? | …the modeler(s) examined the consistency of a model’s predictions of a future event and the actual outcomes in the future. If this was not undertaken, it was assessed whether the reasons were discussed. | N/A | This is only relevant to forecasting models |

| Generalisability and stakeholder involvement | |||

| 15 Is the model generalizability issue discussed? | …the modeler(s) discussed the potential of the resulting model for being applicable to other settings/populations (single/multiple application). | ✅ Fully | Discussion: “The quality of the ECR service appears to be robust to important parameters, such as the number of radiologists. The simulation findings apply to ECR services that can be represented by the model in this study. As such, utilization of this model to its maximum capacity requires tailoring the model to local needs, as institutional bottlenecks differ between providers. We specifically developed this model using an open source programming language so that the source code can serve as a basis for future model refinement and modification.” Huang et al. (2019) |

| 16 Are decision makers or other stakeholders involved in modeling? | …the modeler(s) reported in which part throughout the modeling process decision makers and other stakeholders (eg, subject experts) were engaged. | ❌ Not met | - |

| 17 Is the source of funding stated? | …the sponsorship of the study was indicated. | ❌ Not met | - |

| 18 Are model limitations discussed? | …limitations of the assessed model, especially limitations of interest to decision makers, were discussed. | 🟡 Partially | Does mention a general limitation, but I don’t feel limitations were explored in as much detail as they could be. Discussion: “In general, a limitation of the current implementation is that few measurements exist to parameterize or validate many aspects of the simulation, because such records are not routinely kept. However, explicitly modeling the workflow can allow administrators to keep track of key parameters and performance, improving the model over time.” Huang et al. (2019) |

References

Huang, Shiwei, Julian Maingard, Hong Kuan Kok, Christen D. Barras, Vincent Thijs, Ronil V. Chandra, Duncan Mark Brooks, and Hamed Asadi. 2019. “Optimizing Resources for Endovascular Clot Retrieval for Acute Ischemic Stroke, a Discrete Event Simulation.” Frontiers in Neurology 10 (June). https://doi.org/10.3389/fneur.2019.00653.

Monks, Thomas, Christine S. M. Currie, Bhakti Stephan Onggo, Stewart Robinson, Martin Kunc, and Simon J. E. Taylor. 2019. “Strengthening the Reporting of Empirical Simulation Studies: Introducing the STRESS Guidelines.” Journal of Simulation 13 (1): 55–67. https://doi.org/10.1080/17477778.2018.1442155.

Zhang, Xiange, Stefan K. Lhachimi, and Wolf H. Rogowski. 2020. “Reporting Quality of Discrete Event Simulations in Healthcare—Results From a Generic Reporting Checklist.” Value in Health 23 (4): 506–14. https://doi.org/10.1016/j.jval.2020.01.005.